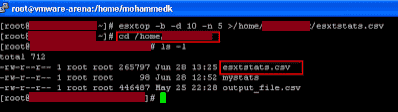

Batch mode: statistics are collected and saved to a CSV file, which can be viewed and analyzed later using Windows perfmon and other tools.

To run esxtop in batch mode and save the output to a file for future analysis, use the command syntax below:

esxtop -b -d 10 -n 5 > /home/nagu/esxstats.csv

The -d switch sets the number of seconds between refreshes.

The -n switch sets the number of iterations esxtop runs.

In the example above, esxtop runs for about 50 seconds (10-second delay × 5 iterations). The output of esxtop is redirected into a CSV file stored at the location

/home/nagu/esxstats.csv
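
The run-time arithmetic can be sketched in a small shell snippet. This is a minimal sketch, not a definitive script: the variable names `delay`, `iterations`, `outfile`, and `total` are illustrative assumptions, and the capture only runs where esxtop is actually available.

```shell
# Illustrative values taken from the example above (variable names are assumptions).
delay=10                          # seconds between refreshes (-d)
iterations=5                      # number of iterations (-n)
outfile=/home/nagu/esxstats.csv   # output location used in this article

# Approximate run time is the delay multiplied by the number of iterations.
total=$((delay * iterations))
echo "esxtop will run for about ${total} seconds"

# Run the capture only if esxtop is present on this machine.
if command -v esxtop >/dev/null 2>&1; then
    esxtop -b -d "$delay" -n "$iterations" > "$outfile"
fi
```

Changing `delay` or `iterations` changes the estimated run time in the same way, for example a 5-second delay with 12 iterations gives roughly one minute of data.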

Once the command completes, browse to /home/nagu to find the esxtop output file “esxstats.csv”. Transfer the CSV file to your Windows desktop using WinSCP and analyze it with Windows perfmon or esxplot.
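
Before transferring the file, it may be worth a quick sanity check that the capture finished. The sketch below assumes the CSV contains one header line followed by one data row per iteration; `count_samples` is a hypothetical helper name, not part of esxtop.

```shell
# count_samples FILE
# Prints the number of data rows in FILE, assuming the first line is a header.
# Hypothetical helper for illustration only.
count_samples() {
    awk 'END { print NR - 1 }' "$1"
}
```

Under that assumption, running `count_samples /home/nagu/esxstats.csv` after the example command should report 5, one row for each iteration. A smaller number suggests the run was interrupted.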
